Multi-Cloud Architectures for the Enterprise

Exploring the complexities and strategies for implementing multi-cloud architectures in enterprise environments, covering both infrastructure and platform services.

These days, many enterprises are opting for a multi-cloud strategy. At my previous employer, I worked with AWS for 10 years. I've recently moved into a new role with a Microsoft partner, who naturally recommends Azure for a lot of workloads (even though AWS claims it is the better platform for Windows workloads).

Enterprises run workloads across different cloud providers for a number of reasons: some voluntary, such as taking advantage of a larger feature set, others driven by legislated compliance or risk mitigation strategies. The workloads can be anything from internal systems to customer-facing digital products. So what can a multi-cloud architecture look like? I'm going to break this down into two categories:

- Infrastructure Services: more traditional services such as networking, compute and databases

- Platform Services: services which can be consumed solely via APIs

Services consumed via APIs are far easier to implement, given you can integrate the SDK for these services almost anywhere. Infrastructure services are far more complex, as you often have to consider the underlying networking layer to access and consume them.

Infrastructure Services

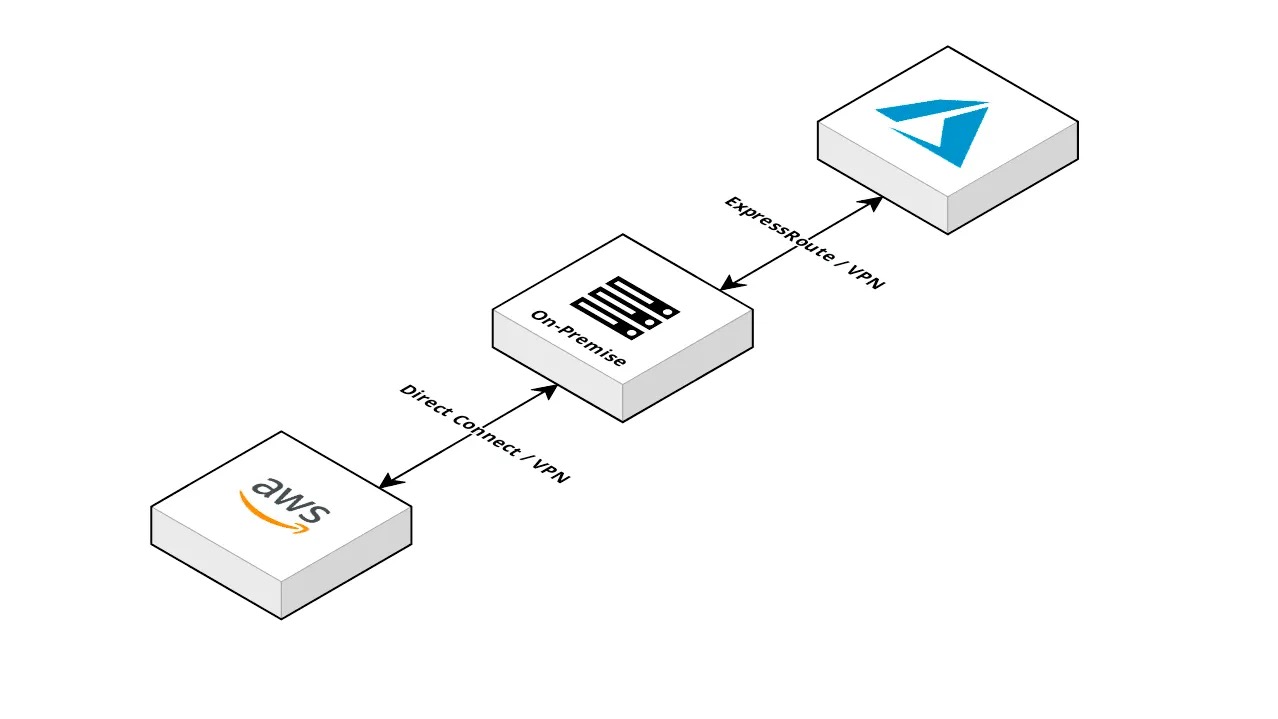

For enterprises, one of the first workloads they may decide to offload is their internal datacentre or mission-critical internal applications. Often, these migrations form an extension of the corporate network or datacentre via Direct Connect (AWS), ExpressRoute (Azure) or an IPSec VPN (both). In the majority of implementations I've seen, network traffic between cloud providers still traverses the original on-premise network, which then becomes a single point of failure.

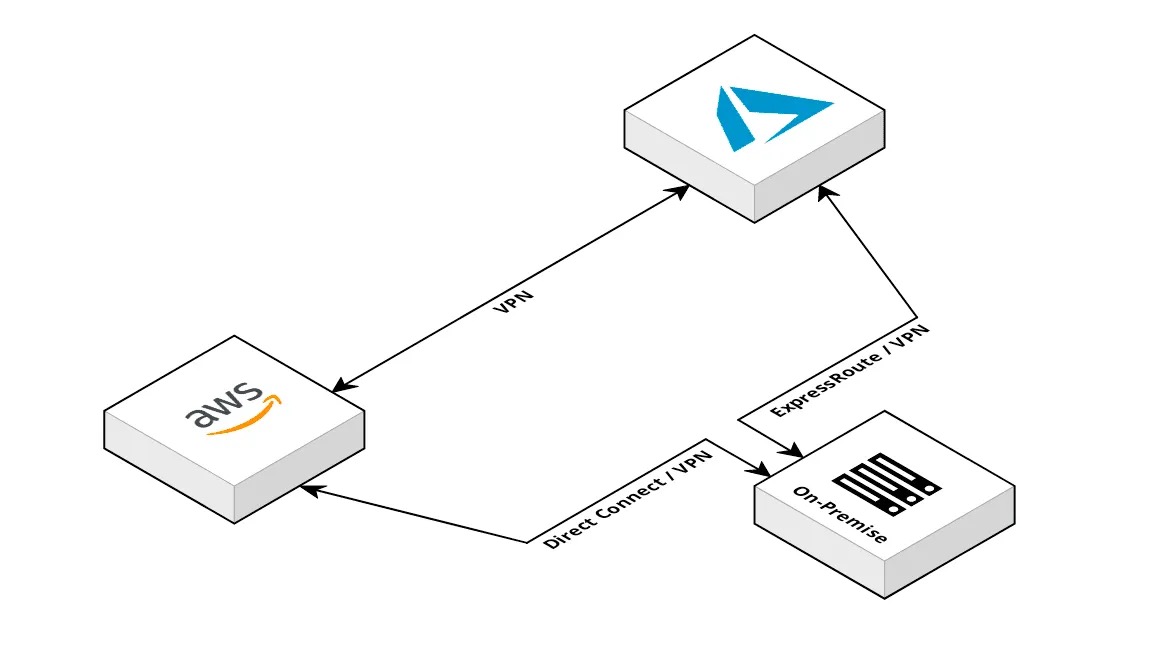

Instead, let's remove the reliance on a single component and change the design of the network to look something like below. Both providers are still connected to the on-premise network via their pre-existing methods and the on-premise network is no longer a critical component to the overall architecture.

The great news is we can create an AWS to Azure VPN connection with all native services on both sides. We'll need:

AWS

- VPC, Subnets, Route Table, Internet Gateway, Customer Gateway, Virtual Private Gateway and Site-to-Site VPN Connection

Azure

- VNet, Subnet(s), Public IP, Local Network Gateway and Virtual Network Gateway

Depending on the complexity of your network, you may need to take multiple on-premise locations into account, but let's start with something relatively simple: a single on-premise location. You'll need to find two IP ranges which aren't currently in use in the network to assign to the Azure VNet and the AWS VPC. I'm a fan of using a subnet mask of /16 (255.255.0.0) on my VPCs and VNets, as it gives a fairly clean range and a large number of IP addresses, e.g., 172.16.0.1–172.16.255.254.

Now, both AWS and Azure (in some regions) have the concept of Availability Zones. This example is going to be in AWS' ap-southeast-2 and Azure's Australia East regions. Azure does not yet support Availability Zones in this region.

On AWS, I'm going to divide my CIDR up into 3 subnet ranges, each as a /18, one per Availability Zone. In a real-world scenario, you would take this further and break your subnets up into separate public and private subnets.

On Azure, I'm going to assign a /17 CIDR to a single subnet, which leaves room for a /24 gateway subnet. Again, in a real-world scenario, you would break up your subnets further and optimize their utilization.

AWS Network Configuration: 172.16.0.0/16

- Subnet 1 (2a): 172.16.0.0/18

- Subnet 2 (2b): 172.16.64.0/18

- Subnet 3 (2c): 172.16.128.0/18

Azure Network Configuration: 172.17.0.0/16

- Subnet 1: 172.17.0.0/17

- Gateway Subnet: 172.17.128.0/24
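Before creating anything, it can be worth sanity-checking an address plan like the one above. The following is a minimal sketch using Python's standard `ipaddress` module. The `check_plan` helper and the dictionary keys are names made up for this illustration. It confirms that every subnet sits inside its parent range, that no two subnets overlap, and that the VPC and VNet ranges are distinct.

```python
import ipaddress

# Address plan copied from the lists above.
AWS_VPC = ipaddress.ip_network("172.16.0.0/16")
AWS_SUBNETS = {
    "2a": ipaddress.ip_network("172.16.0.0/18"),
    "2b": ipaddress.ip_network("172.16.64.0/18"),
    "2c": ipaddress.ip_network("172.16.128.0/18"),
}
AZURE_VNET = ipaddress.ip_network("172.17.0.0/16")
AZURE_SUBNETS = {
    "subnet-1": ipaddress.ip_network("172.17.0.0/17"),
    "gateway": ipaddress.ip_network("172.17.128.0/24"),
}


def check_plan(parent, subnets):
    """Return a list of problems found in one network's subnet plan."""
    problems = []
    items = list(subnets.items())
    # Every subnet must sit inside the parent VPC/VNet range.
    for name, net in items:
        if not net.subnet_of(parent):
            problems.append(f"{name} ({net}) is outside {parent}")
    # No two subnets in the same network may overlap.
    for i, (name_a, net_a) in enumerate(items):
        for name_b, net_b in items[i + 1:]:
            if net_a.overlaps(net_b):
                problems.append(f"{name_a} overlaps {name_b}")
    return problems


if __name__ == "__main__":
    print(check_plan(AWS_VPC, AWS_SUBNETS) or "AWS plan OK")
    print(check_plan(AZURE_VNET, AZURE_SUBNETS) or "Azure plan OK")
    # The two sides of the VPN must not share address space.
    print("VPC/VNet overlap:", AWS_VPC.overlaps(AZURE_VNET))
```

The same check extends naturally to the on-premise range, which also has to stay clear of both cloud networks.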

As setting up VPCs and VNets is relatively straightforward, I'm not going to walk through that process here and will skip right to the VPN configuration. There is a bit of back and forth to get this to work between the two providers, so please bear with me!

Azure Configuration

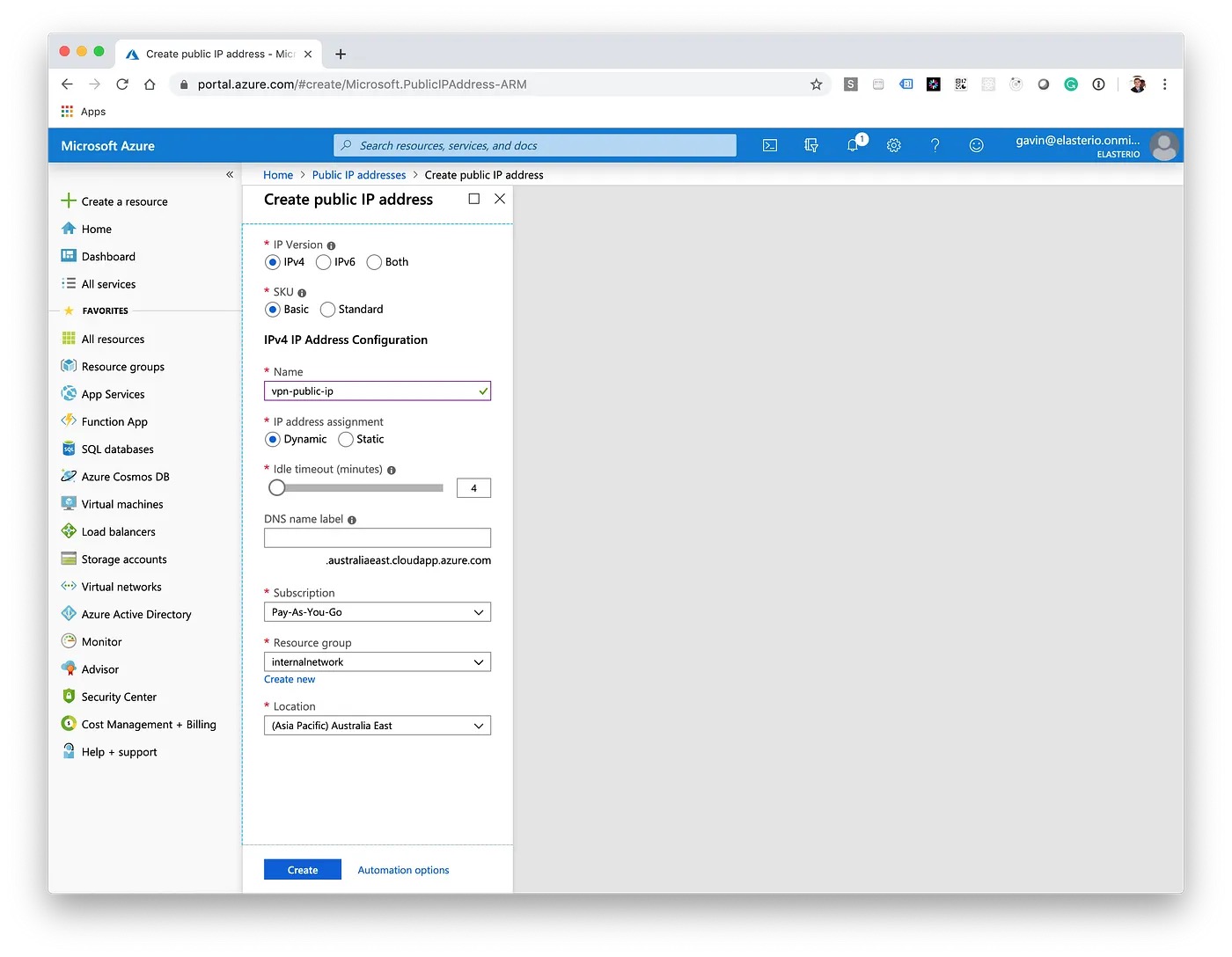

First, we'll create a public IP address which will ultimately get assigned to the Virtual Network Gateway.

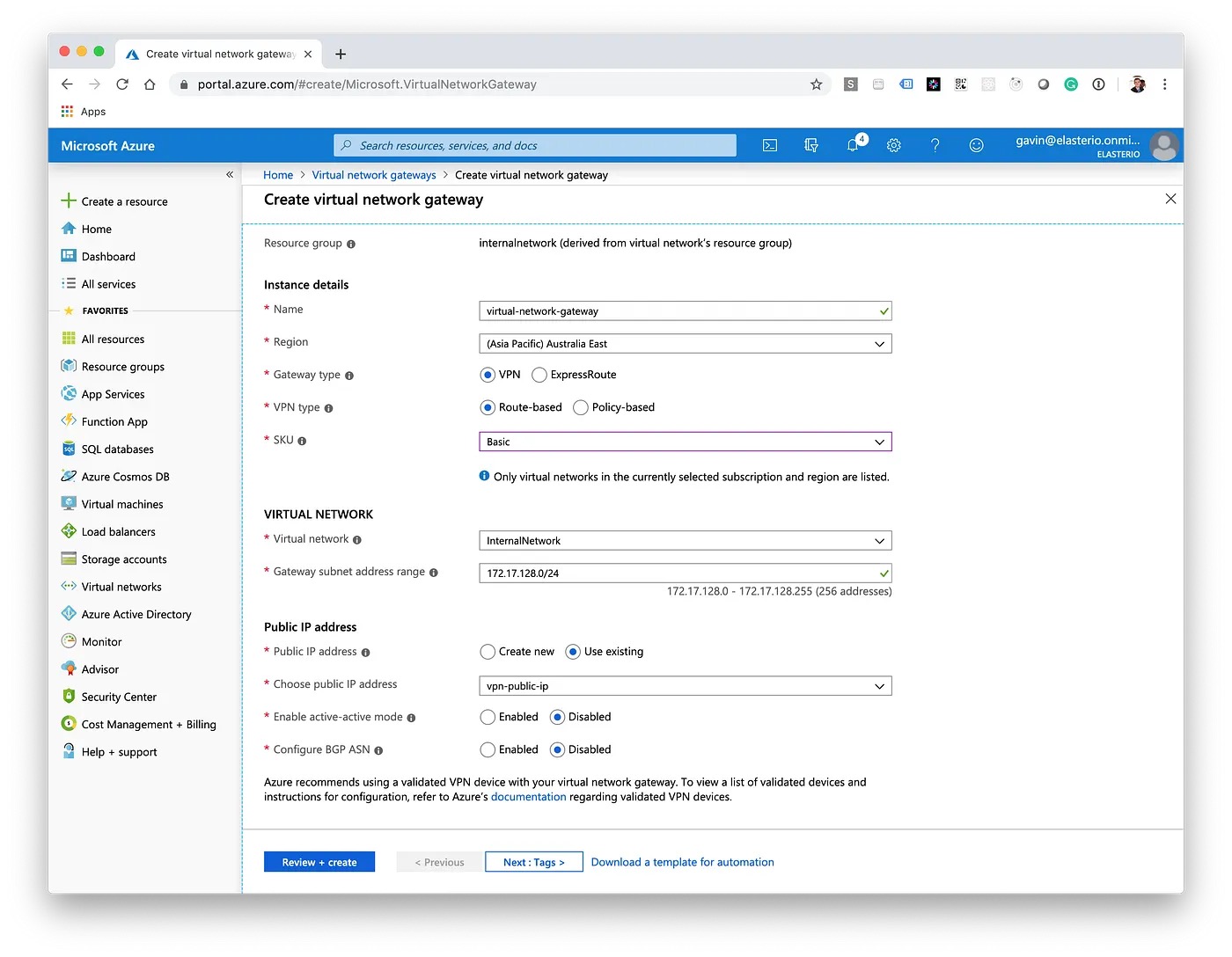

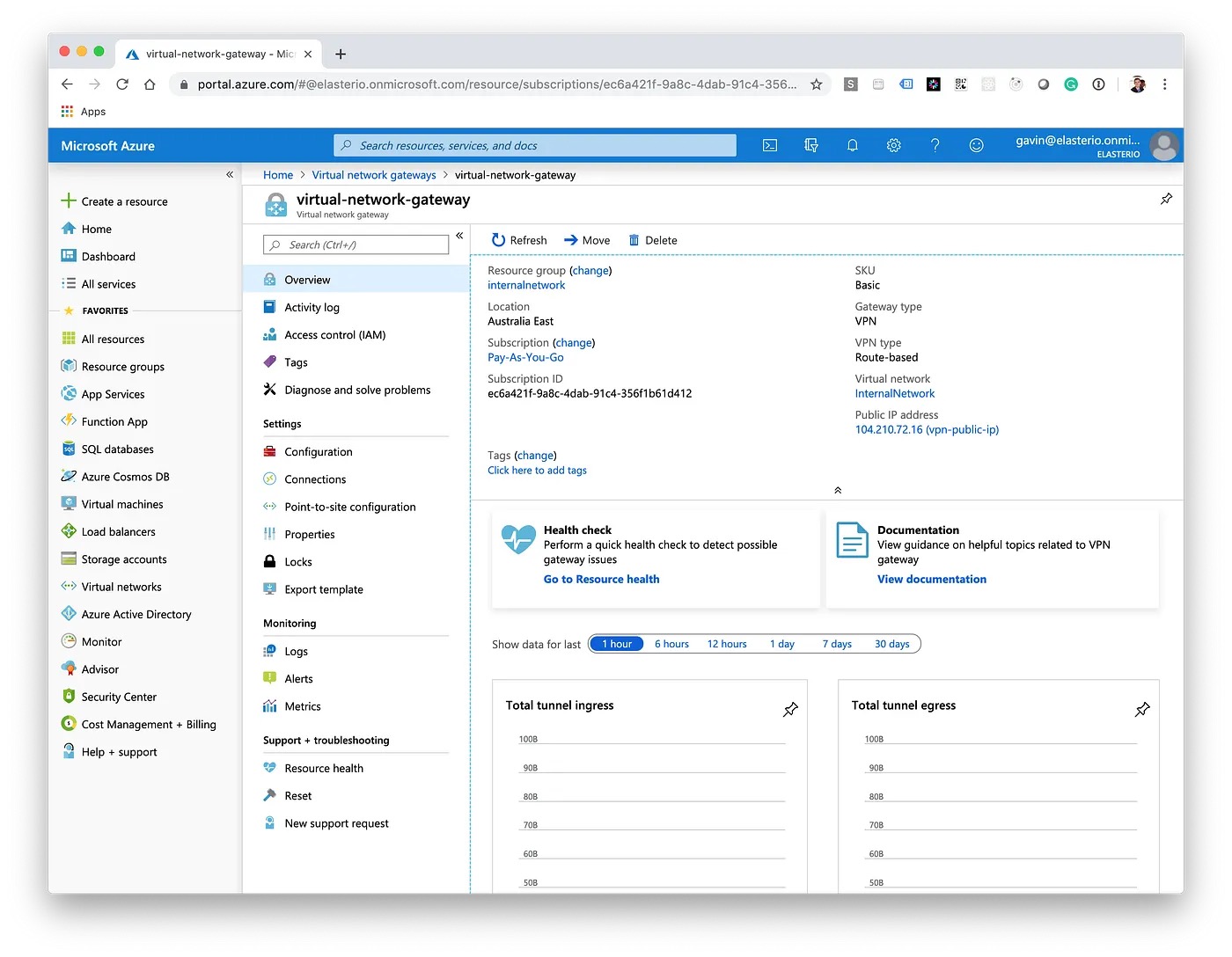

Next, we'll create a Virtual Network Gateway. It's worth researching the SKUs as there are different features and throughput amounts available. Remember to select the Public IP Address which was created earlier.

A Virtual Network Gateway may take anywhere from 30–60 minutes to create. Don't worry if it appears nothing is happening; just keep checking back in on the deployment progress. Once it has completed, note down the Public IP Address for the next steps in AWS.

AWS Configuration

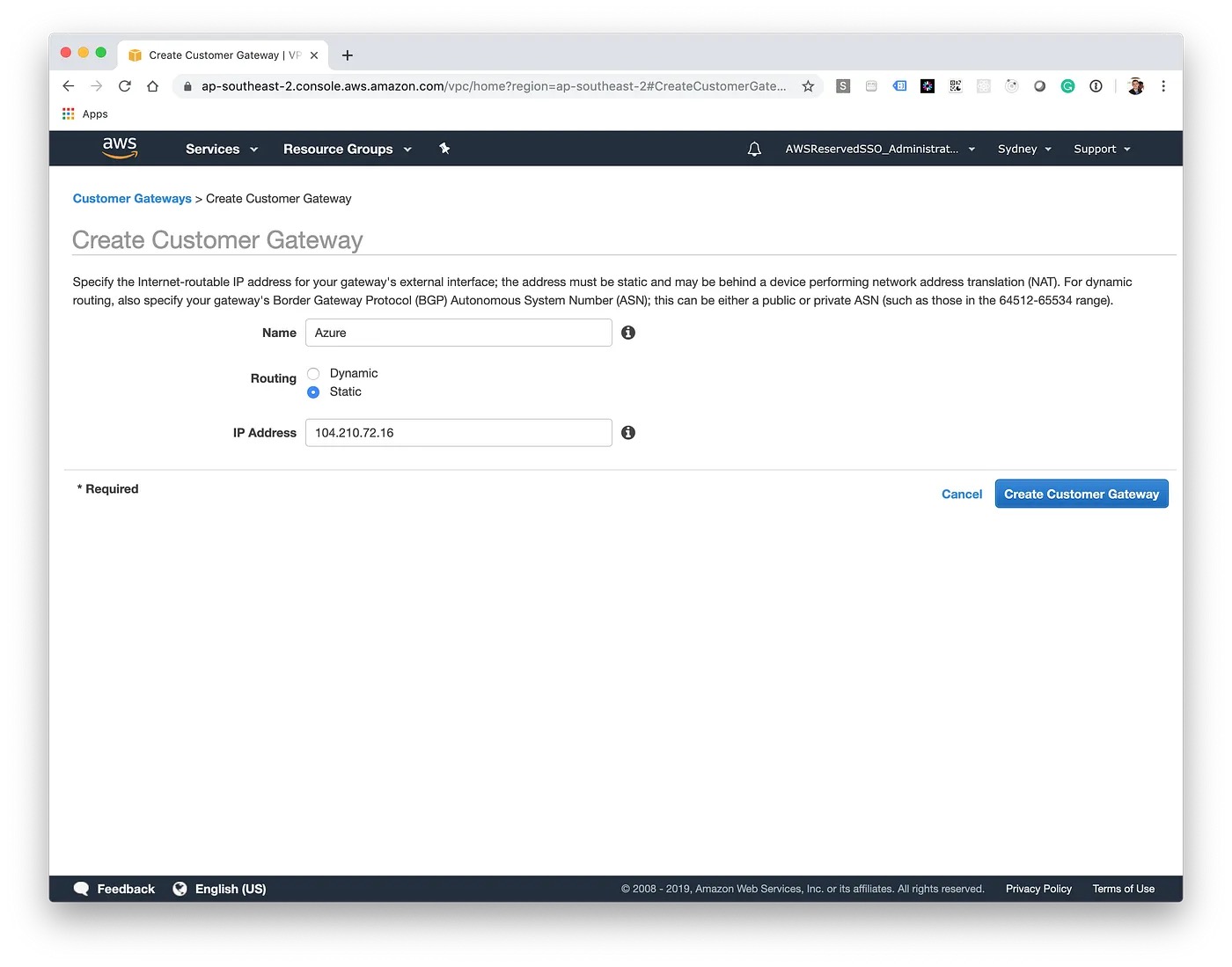

In AWS, we'll now create a Customer Gateway. In this case, the Customer Gateway is Azure, so we'll need to enter the Public IP Address noted down in the last step.

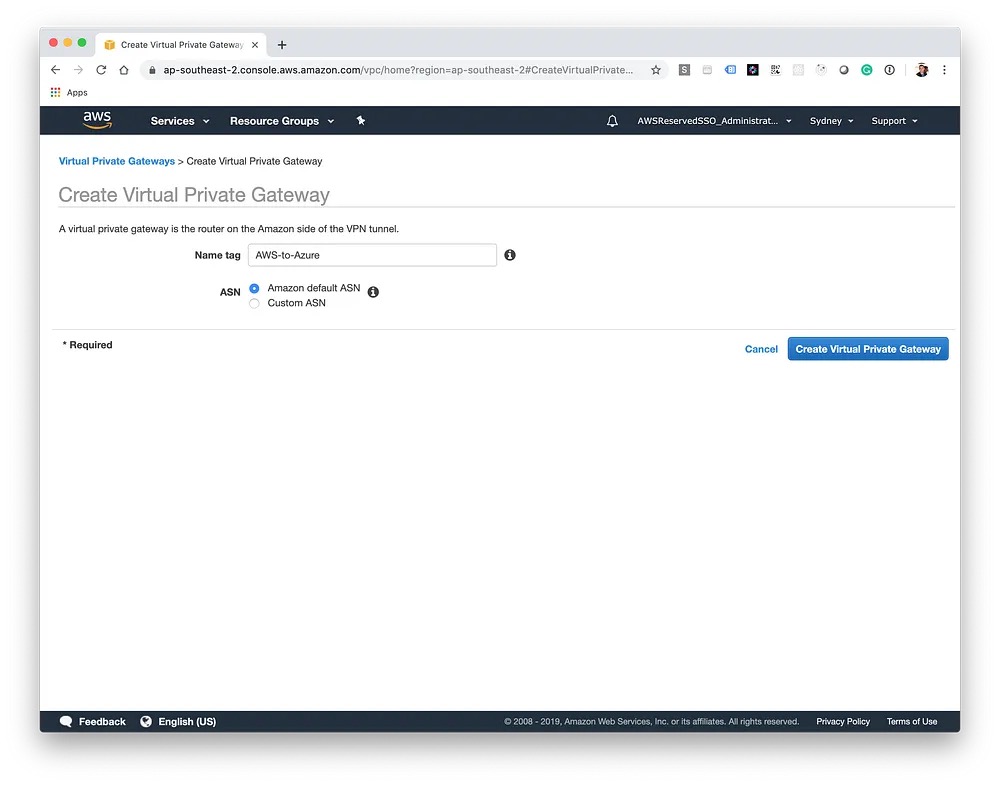

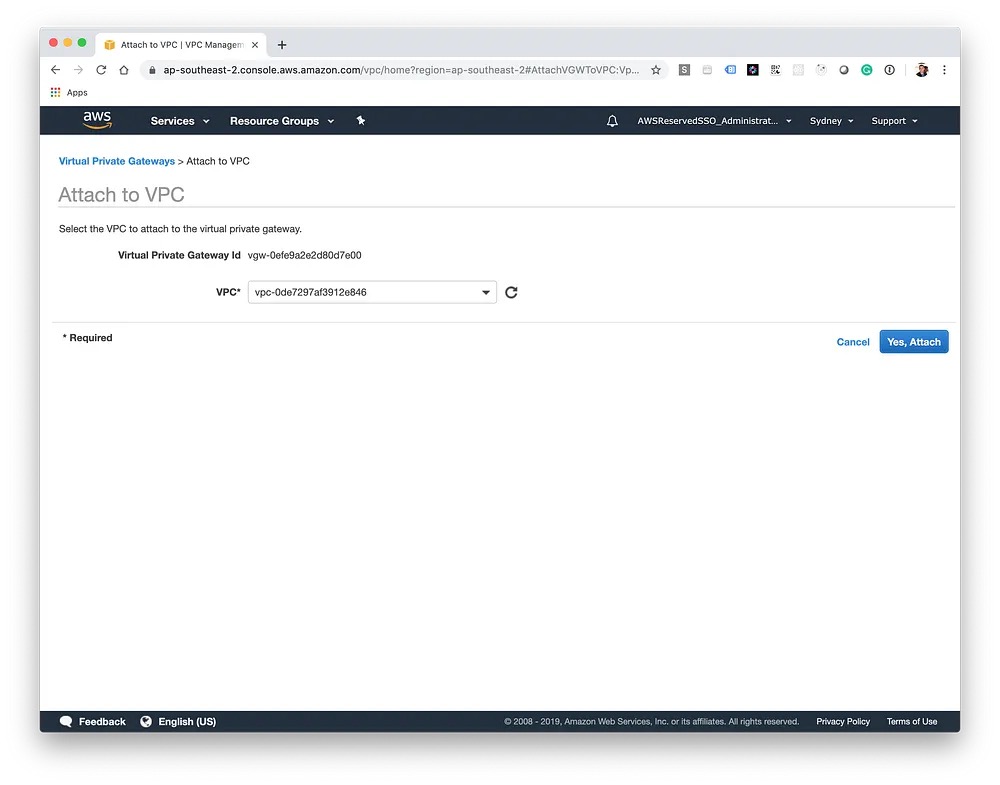

Next, we'll create the Virtual Private Gateway. This is the gateway on Amazon's end which will allow us to route traffic to the remote network. Once created, it also needs to be attached to our VPC, which can take a few moments.

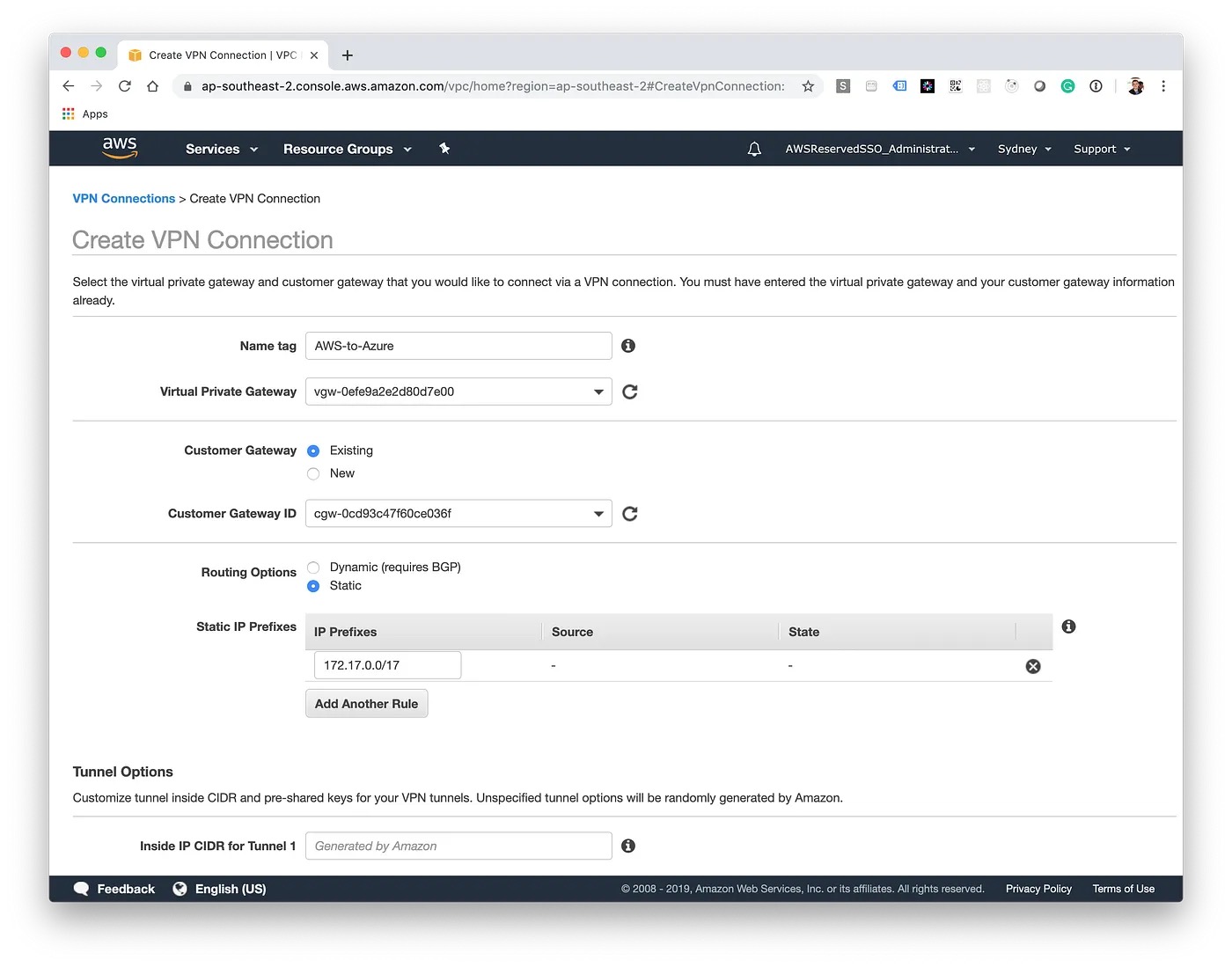

Lastly, we want to initiate the VPN connection from AWS to Azure. To do this, we need to create a new Site-to-Site VPN Connection. We'll select our newly created gateways and then enter the IP address range of the Azure VNet.
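For anyone who prefers scripting over the console, the three AWS steps so far (Customer Gateway, Virtual Private Gateway, Site-to-Site VPN Connection) could be sketched roughly as follows. This is not what I did above, just an illustration under a few assumptions: `ec2` is a boto3 EC2 client (for example `boto3.client("ec2", region_name="ap-southeast-2")`), the connection uses static routing, `65000` is a placeholder ASN, and `create_aws_side` is a name invented for this sketch.

```python
def create_aws_side(ec2, vpc_id, azure_gateway_ip, azure_cidr="172.17.0.0/16"):
    """Create the Customer Gateway, Virtual Private Gateway and VPN connection.

    `ec2` is assumed to be a boto3 EC2 client; the IDs returned are the ones
    AWS generates for each resource.
    """
    # The Customer Gateway represents the Azure Virtual Network Gateway.
    cgw_id = ec2.create_customer_gateway(
        BgpAsn=65000,  # assumption: placeholder ASN for a statically routed VPN
        PublicIp=azure_gateway_ip,
        Type="ipsec.1",
    )["CustomerGateway"]["CustomerGatewayId"]

    # The Virtual Private Gateway is the AWS end and must be attached to the VPC.
    vgw_id = ec2.create_vpn_gateway(Type="ipsec.1")["VpnGateway"]["VpnGatewayId"]
    ec2.attach_vpn_gateway(VpcId=vpc_id, VpnGatewayId=vgw_id)

    # Static routing: the Azure address range is added as a static route below.
    vpn_id = ec2.create_vpn_connection(
        CustomerGatewayId=cgw_id,
        VpnGatewayId=vgw_id,
        Type="ipsec.1",
        Options={"StaticRoutesOnly": True},
    )["VpnConnection"]["VpnConnectionId"]

    # Wait for the connection to leave the pending state before adding routes.
    ec2.get_waiter("vpn_connection_available").wait(VpnConnectionIds=[vpn_id])
    ec2.create_vpn_connection_route(
        VpnConnectionId=vpn_id, DestinationCidrBlock=azure_cidr
    )
    return {"customer_gateway": cgw_id, "vpn_gateway": vgw_id, "vpn_connection": vpn_id}
```

Passing the client in as an argument keeps the function easy to dry-run against a stub before pointing it at a real account.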

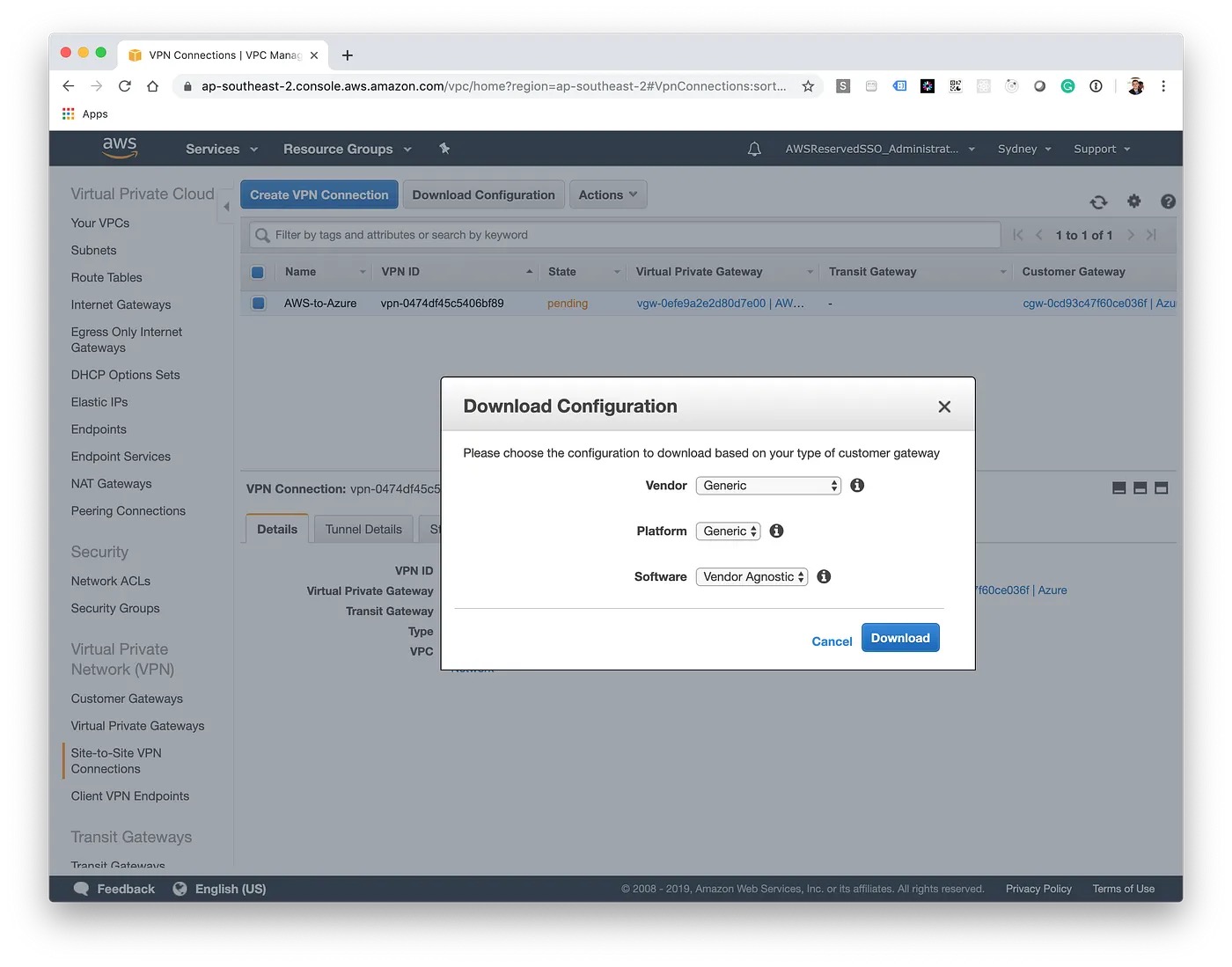

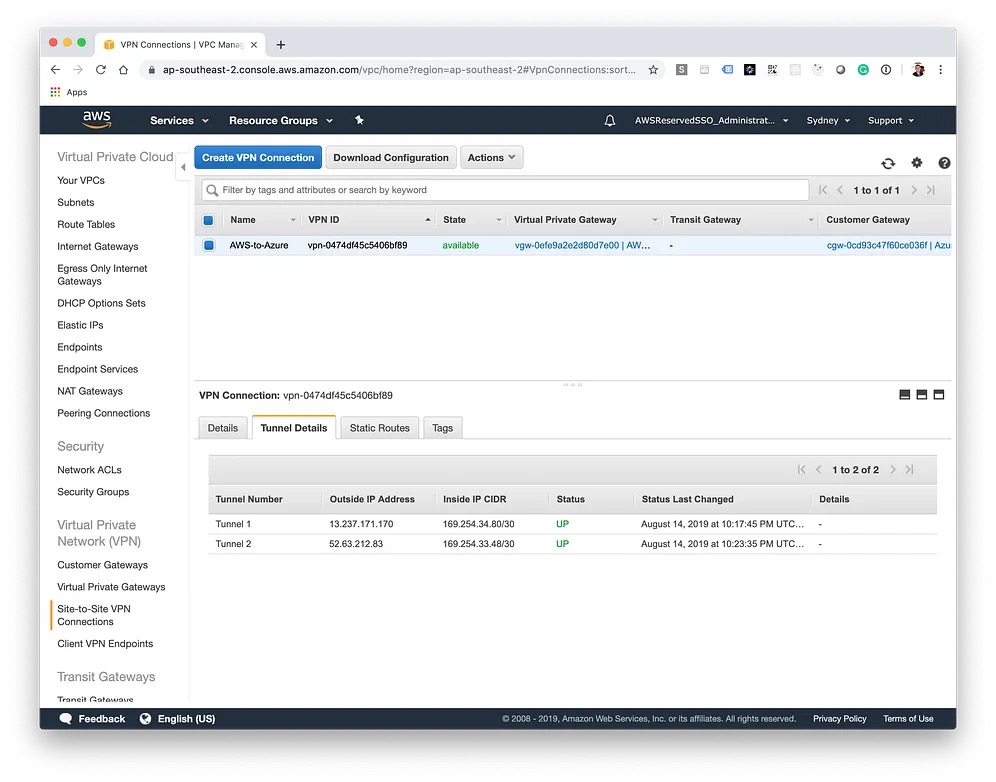

Before we head back to Azure, download the generic configuration details and save them for the next step. The file contains information such as the Public IP Addresses, shared keys and local tunnel addresses.
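As an alternative to reading the downloaded file by hand, the two values Azure needs per tunnel (the AWS outside IP address and the shared key) can probably be pulled from the API as well. The sketch below assumes `ec2` is a boto3 EC2 client and that the `describe_vpn_connections` response carries the per-tunnel settings under `Options` → `TunnelOptions`. Treat that response shape, and the `tunnel_details` helper name, as assumptions to verify against your own account.

```python
def tunnel_details(ec2, vpn_connection_id):
    """Return a list of (outside_ip, pre_shared_key) pairs, one per tunnel.

    `ec2` is assumed to be a boto3 EC2 client. The keys read here are an
    assumption about the describe_vpn_connections response shape.
    """
    response = ec2.describe_vpn_connections(VpnConnectionIds=[vpn_connection_id])
    connection = response["VpnConnections"][0]
    return [
        (tunnel["OutsideIpAddress"], tunnel["PreSharedKey"])
        for tunnel in connection["Options"]["TunnelOptions"]
    ]
```

Each pair corresponds to one Local Network Gateway and connection on the Azure side. The shared keys are secrets, so avoid printing or logging them.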

Completing Azure Configuration

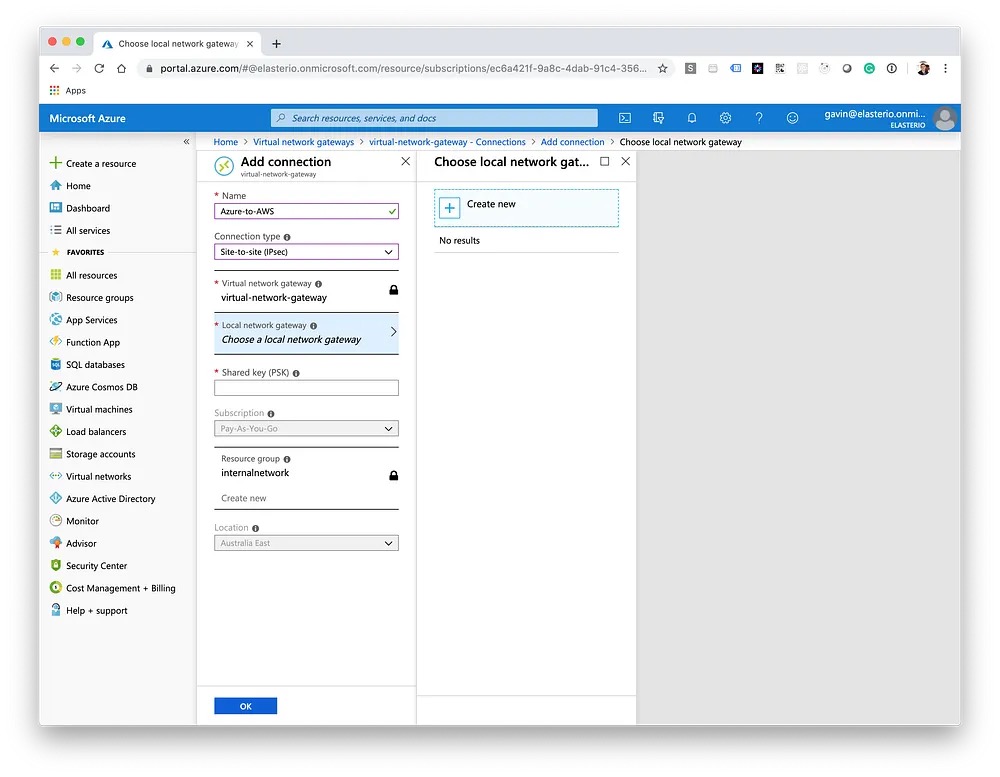

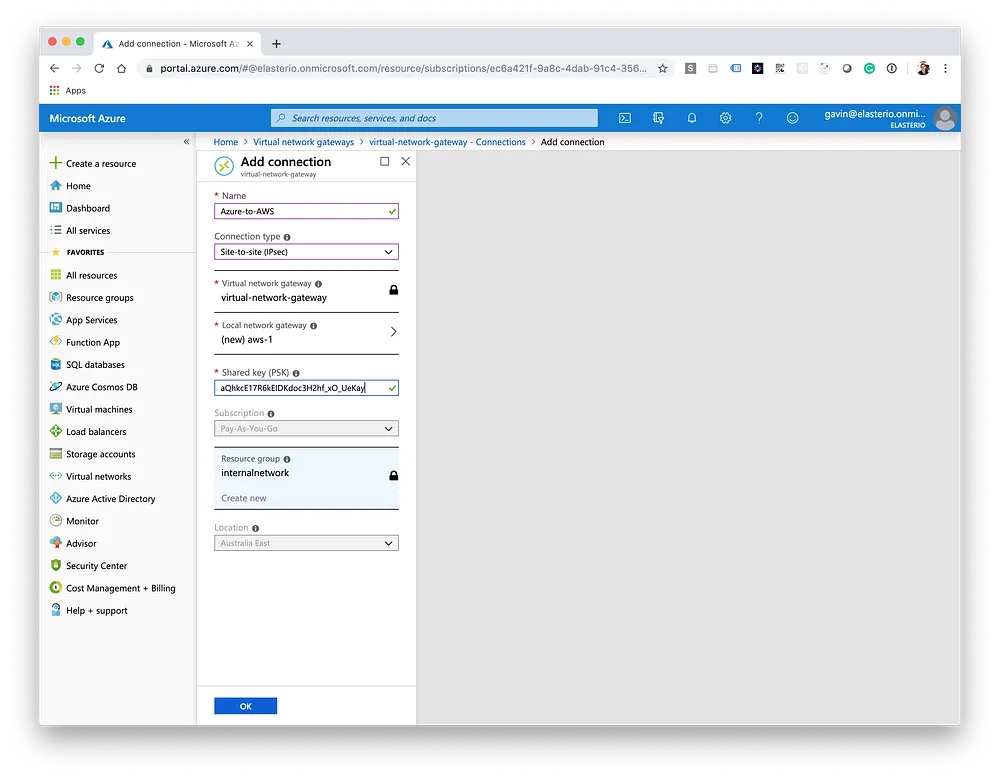

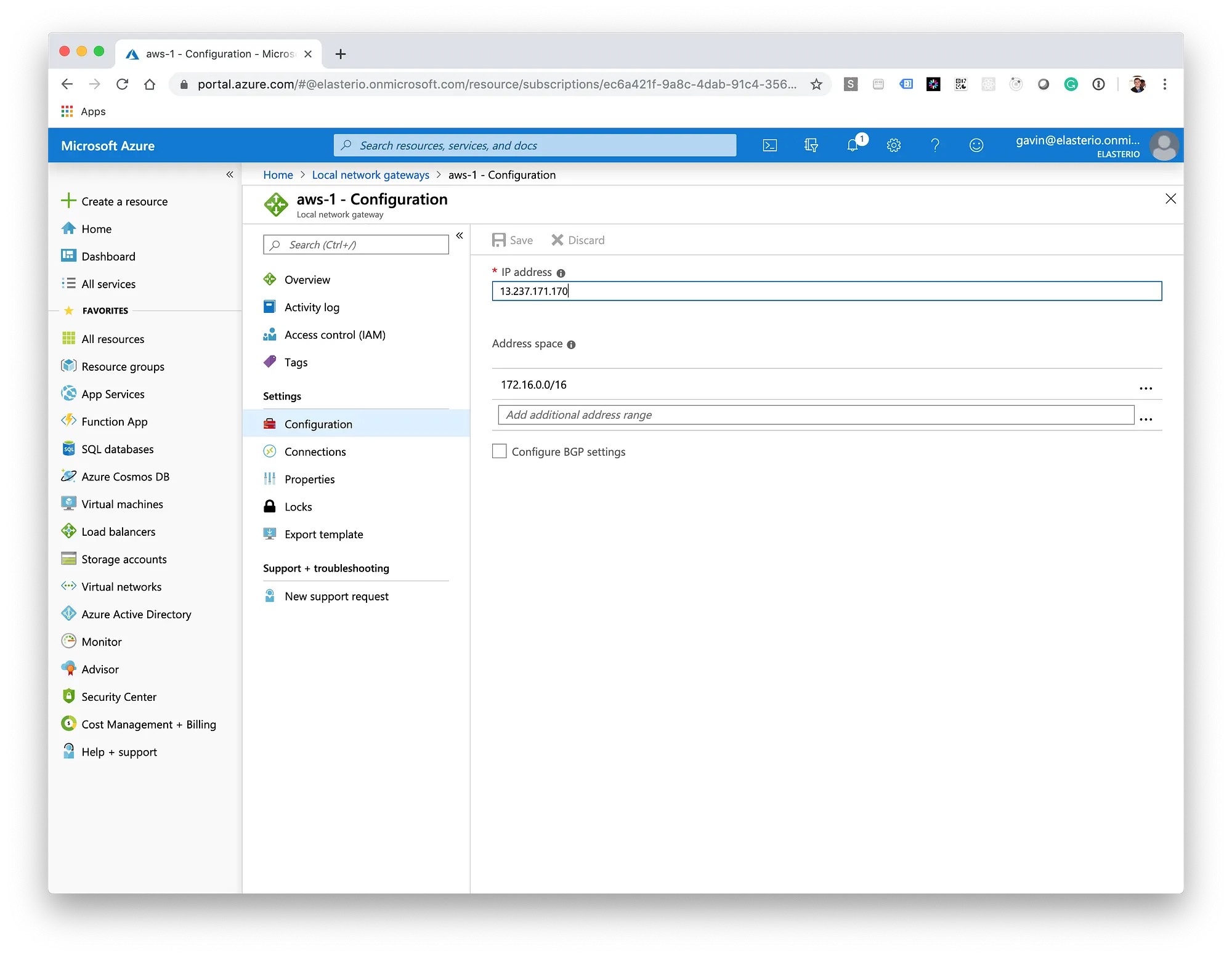

Back in Azure, navigate to your Virtual Network Gateway and choose Connections. We need two Local Network Gateways, one for each of the AWS tunnels listed in the configuration file downloaded in the previous step. Start by creating the first.

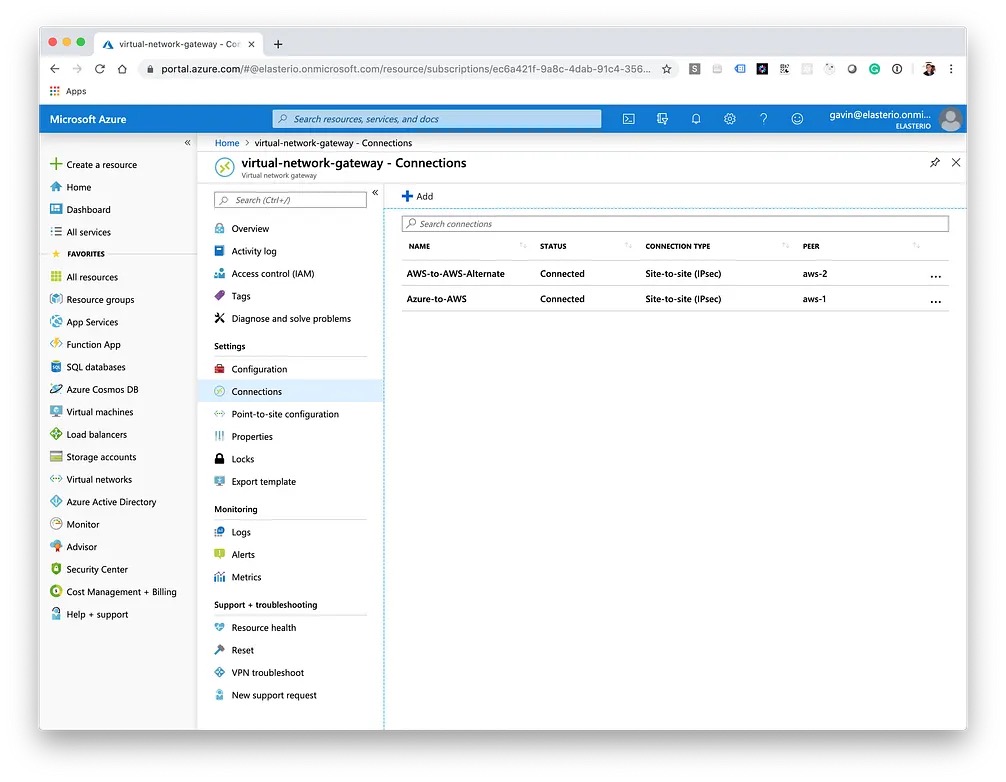

Following the same steps as above, create the second Local Network Gateway with the secondary AWS tunnel details. After a few minutes, you should see the status as "UP" in AWS and "Connected" in Azure for both of the tunnels.
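The AWS half of that status check can also be scripted rather than watched in the console. A minimal sketch, assuming `ec2` is a boto3 EC2 client and using the tunnel telemetry returned by `describe_vpn_connections` (`tunnel_status` and `all_tunnels_up` are names made up for this example):

```python
def tunnel_status(ec2, vpn_connection_id):
    """Map each tunnel's AWS outside IP address to its status ("UP" or "DOWN")."""
    response = ec2.describe_vpn_connections(VpnConnectionIds=[vpn_connection_id])
    telemetry = response["VpnConnections"][0].get("VgwTelemetry", [])
    return {entry["OutsideIpAddress"]: entry["Status"] for entry in telemetry}


def all_tunnels_up(status_by_ip):
    """True only when at least one tunnel is reported and every tunnel is UP."""
    return bool(status_by_ip) and all(s == "UP" for s in status_by_ip.values())
```

Calling `all_tunnels_up(tunnel_status(ec2, vpn_id))` in a loop with a short sleep gives a simple wait-until-connected check.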

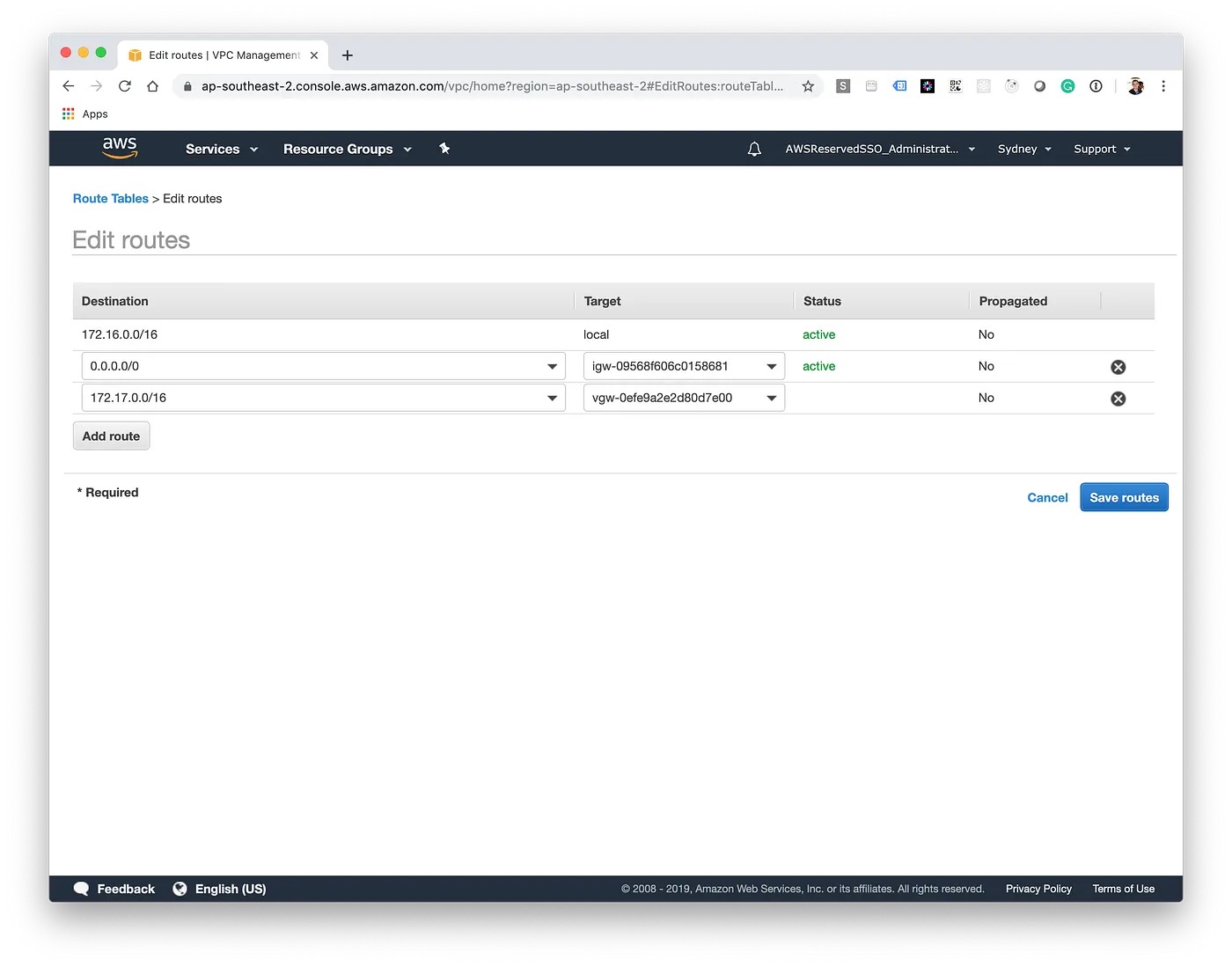

Now we need to create route table entries in AWS so that traffic destined for the Azure network is routed via our Virtual Private Gateway. Azure does this automatically behind the scenes for us.
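As a rough sketch of that routing step, assuming `ec2` is a boto3 EC2 client and that you already know the IDs of the route tables used by your subnets (the `route_azure_via_vgw` helper is hypothetical):

```python
def route_azure_via_vgw(ec2, route_table_ids, vpn_gateway_id, azure_cidr="172.17.0.0/16"):
    """Add a route for the Azure VNet range to each given AWS route table."""
    for route_table_id in route_table_ids:
        ec2.create_route(
            RouteTableId=route_table_id,
            DestinationCidrBlock=azure_cidr,
            GatewayId=vpn_gateway_id,  # the Virtual Private Gateway (vgw-...)
        )
    return len(route_table_ids)
```

An alternative worth considering is enabling route propagation on the route table, so that routes learned by the Virtual Private Gateway appear without manual entries.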

Testing

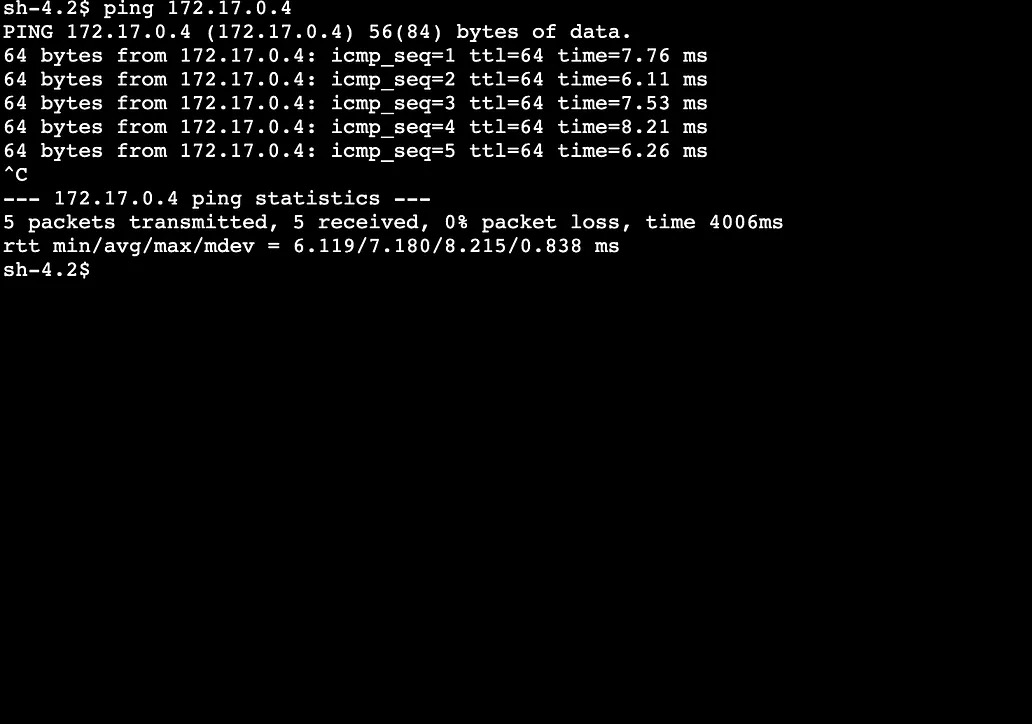

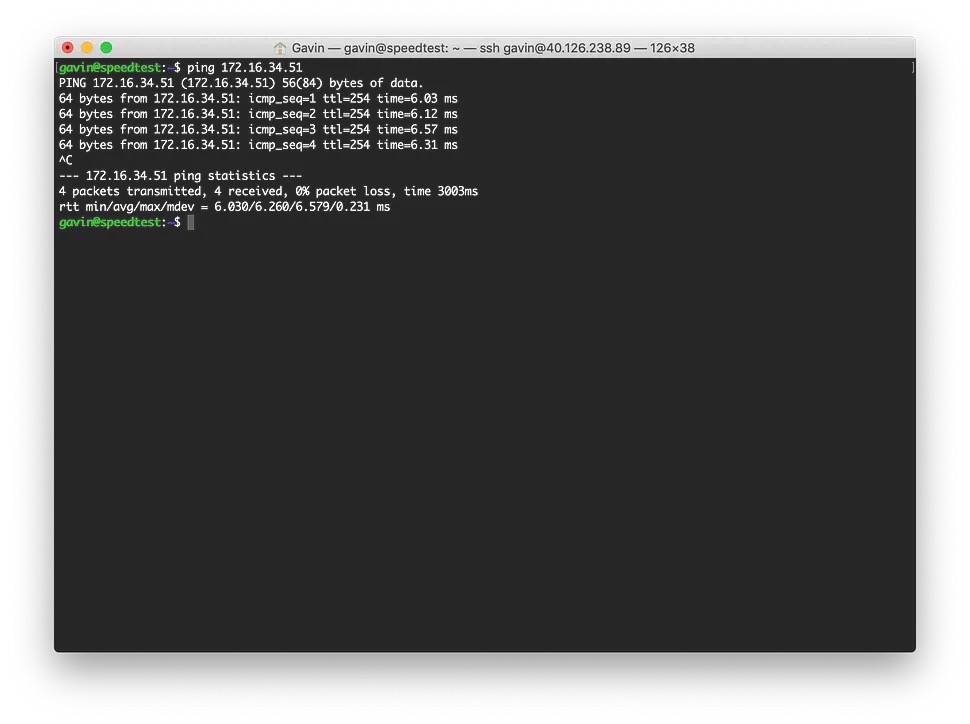

Before testing, make sure the security groups and network ACLs on both cloud providers allow traffic from the other provider's internal IP range. This ensures traffic can get through to the infrastructure at either end. First up, a simple ping test to see if we have connectivity; ~7ms isn't bad!

Left: AWS, Right: Azure

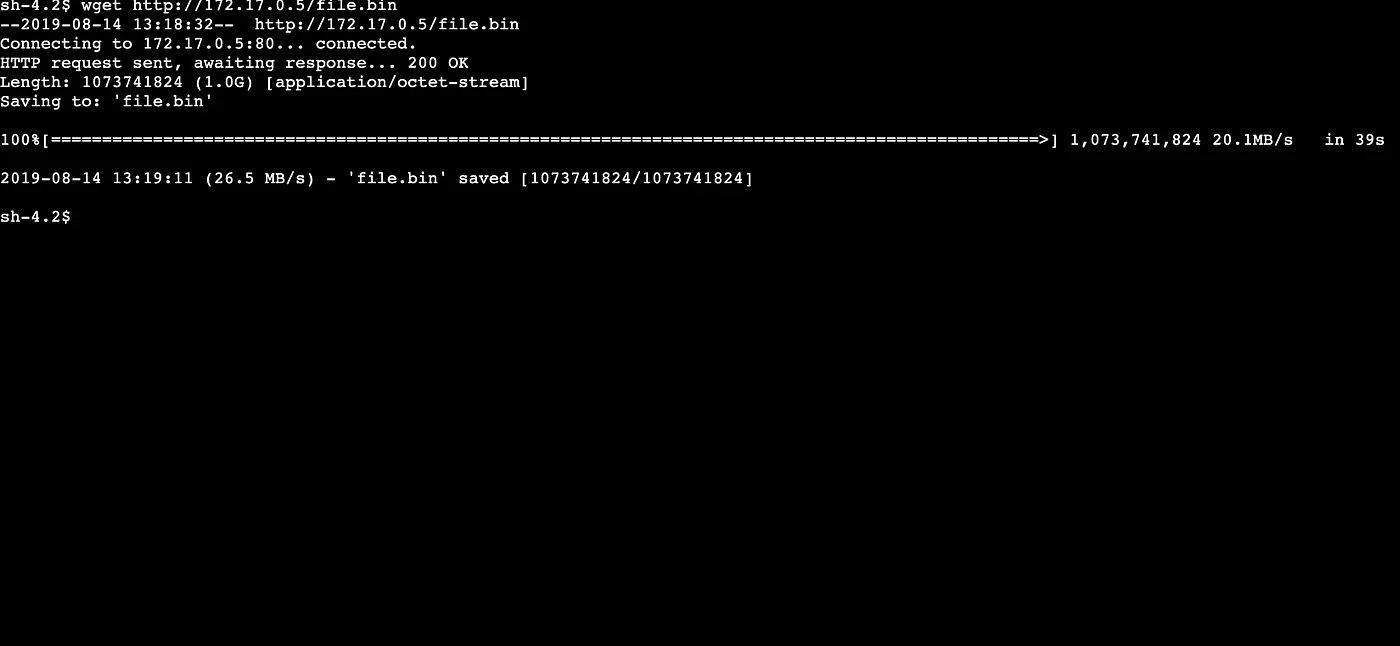

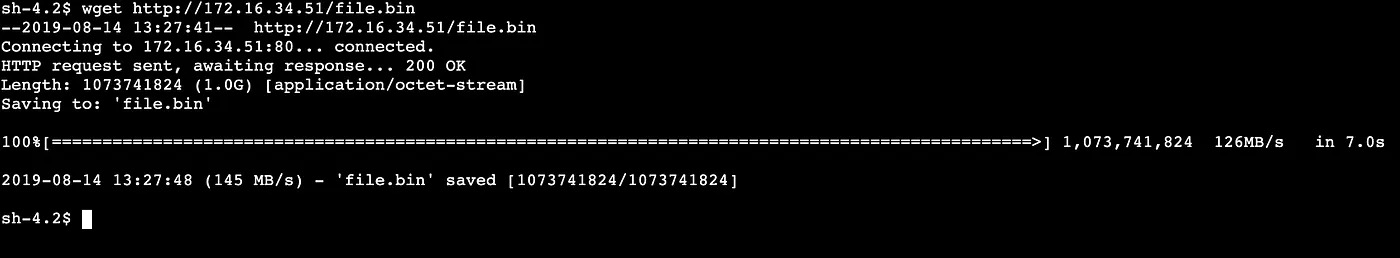
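On the AWS side, the security group change needed before the ping test might look something like the sketch below. It assumes `ec2` is a boto3 EC2 client and only opens ICMP from the Azure VNet range. The helper names are invented for this example, and the Azure side needs an equivalent rule for the AWS range.

```python
def icmp_from(cidr, description):
    """Build an ingress permission allowing all ICMP from one CIDR range."""
    return {
        "IpProtocol": "icmp",
        "FromPort": -1,  # -1 means all ICMP types
        "ToPort": -1,    # -1 means all ICMP codes
        "IpRanges": [{"CidrIp": cidr, "Description": description}],
    }


def allow_ping_from_azure(ec2, security_group_id, azure_cidr="172.17.0.0/16"):
    """Allow ping from the Azure VNet range into an AWS security group."""
    ec2.authorize_security_group_ingress(
        GroupId=security_group_id,
        IpPermissions=[icmp_from(azure_cidr, "Ping from Azure VNet")],
    )
```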

Let's take a look at throughput. I created a simple Apache web server on a virtual machine in Azure and loaded a 1GB binary file onto it. Keep in mind that I'm only using t3.nano instances on AWS and B series virtual machines on Azure.

Transferring the 1GB file from Azure to AWS completed in 39 seconds with an average of 26.5MB/sec (roughly 212Mbps). Not bad, considering the advertised throughput of the basic SKU was benchmarked at 100Mbps by Microsoft. AWS states its maximum throughput is 1.25Gbps.

EC2 instance on AWS

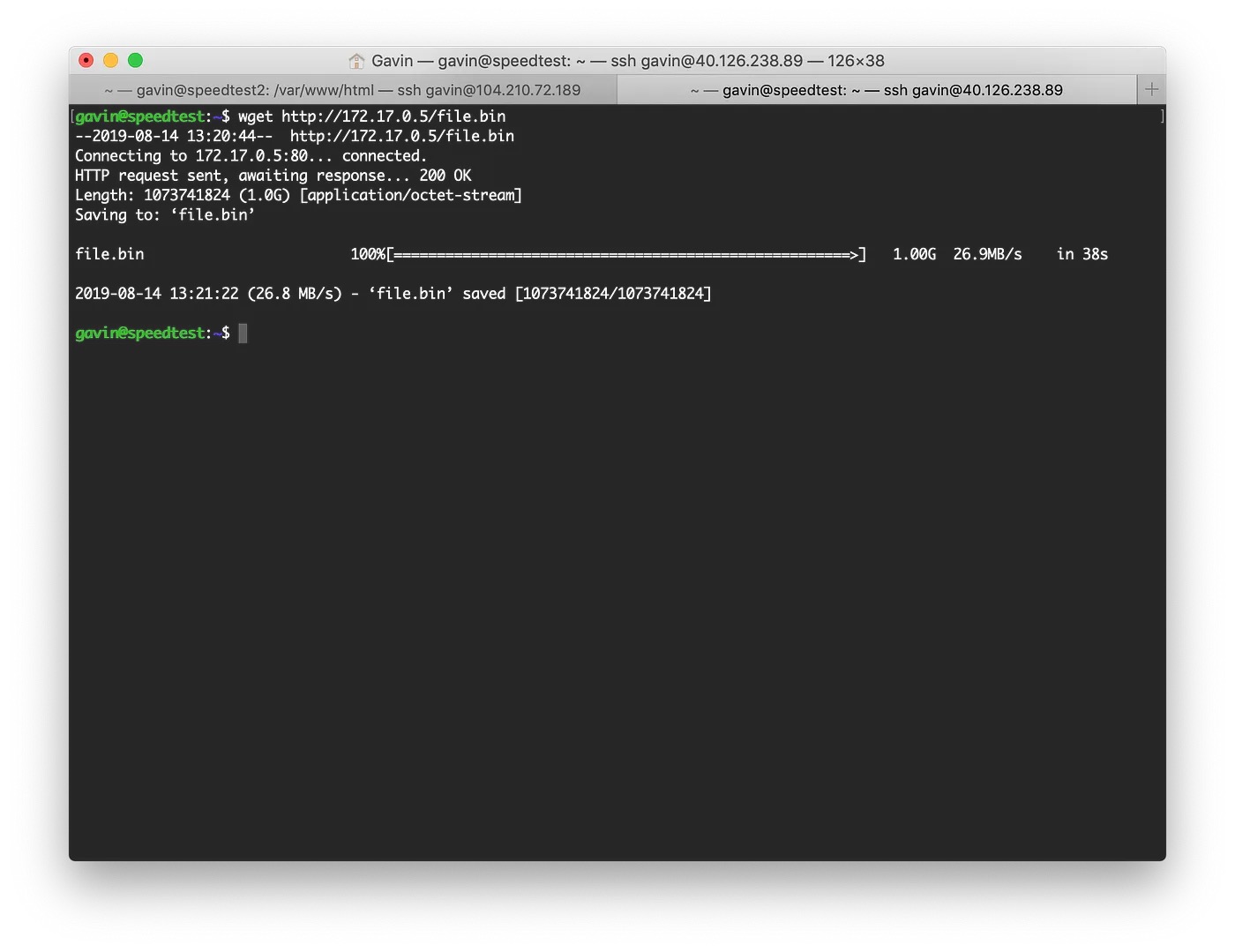
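Since the transfer speed is reported in megabytes per second while both providers advertise megabits per second, a quick conversion helps when comparing the figures. A small sketch using the numbers from the test above (the function names are just for illustration):

```python
def mb_per_s_to_mbps(megabytes_per_second):
    """Convert megabytes per second to megabits per second (8 bits per byte)."""
    return megabytes_per_second * 8


def share_of_limit(observed_mbps, limit_mbps):
    """Return the observed throughput as a fraction of an advertised limit."""
    return observed_mbps / limit_mbps


observed = mb_per_s_to_mbps(26.5)   # average reported for the Azure to AWS transfer
azure_basic_benchmark = 100         # Mbps, Microsoft's figure for the basic SKU
aws_vpn_limit = 1250                # Mbps, i.e. 1.25Gbps

if __name__ == "__main__":
    print(f"Observed: {observed:.0f} Mbps")
    print(f"Versus Azure basic benchmark: {share_of_limit(observed, azure_basic_benchmark):.2f}x")
    print(f"Share of AWS limit: {share_of_limit(observed, aws_vpn_limit):.0%}")
```

In other words, the observed rate is a little over double the basic SKU's benchmark figure, and well under a fifth of the AWS limit.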

To see how our VPN fared, I performed the same transfer from Azure to Azure, with similar results. It looks like the virtual machines, in this case, are the bottleneck.

Virtual Machine on Azure

As a comparison, I performed the same test with AWS to AWS. The new burstable t3 series instances have far superior transfer speeds for the price point than the Azure B series virtual machines do.

EC2 instance on AWS

Cost Analysis

I've priced this all in USD for a direct comparison, in ap-southeast-2 (AWS) and Australia East (Azure).

VPN Gateway Pricing

- AWS VPN: $0.05/hour, $36/month

- Azure VPN Basic: $0.04/hour, $28.80/month

Depending on your requirements (e.g., needing more than 100Mbps of throughput), Azure has additional pricing tiers:

- Azure VPN VpnGw1: $0.19/hour, $136.80/month

- Azure VPN VpnGw2: $0.49/hour, $352.80/month

- Azure VPN VpnGw3: $1.25/hour, $900/month
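The monthly figures above are simply the hourly rate multiplied by a 720-hour month (30 days of 24 hours, an assumption that matches every figure quoted). A short sketch that reproduces them and estimates the AWS cost when more than one VPN connection is needed (`monthly_cost` is a name made up for this example):

```python
from decimal import Decimal

HOURS_PER_MONTH = 720  # assumption: 30 days x 24 hours, matching the figures above

# Hourly rates in USD, copied from the lists above.
HOURLY_USD = {
    "AWS VPN": Decimal("0.05"),
    "Azure VPN Basic": Decimal("0.04"),
    "Azure VPN VpnGw1": Decimal("0.19"),
    "Azure VPN VpnGw2": Decimal("0.49"),
    "Azure VPN VpnGw3": Decimal("1.25"),
}


def monthly_cost(hourly_rate, units=1):
    """Monthly cost for `units` resources billed at the same hourly rate."""
    return hourly_rate * HOURS_PER_MONTH * units


if __name__ == "__main__":
    for name, rate in HOURLY_USD.items():
        print(f"{name}: ${monthly_cost(rate):.2f}/month")
    # AWS charges per VPN connection, so three connections cost three times as much.
    print(f"AWS VPN x3: ${monthly_cost(HOURLY_USD['AWS VPN'], units=3):.2f}/month")
```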

Although Azure's pricing gets more expensive as you step up beyond the basic tier, you can create multiple connections under your Virtual Network Gateway. On AWS, you need to create a new VPN Connection each time, resulting in an additional charge of $0.05/hour per connection, and you are limited to 1.25Gbps across all connections combined.

Both providers charge comparable outbound data rates for VPN connections (inbound data is free).

Conclusion

Both providers make it relatively easy to get a VPN up and running. My biggest gripe with Azure is how long it takes to provision the Virtual Network Gateway.

I haven't benchmarked what the performance of running database connections over the VPN would look like, though 7ms latency may be just too high. That being said, there are lots of other practical use cases such as operating one provider as a total failover, providing connectivity to private APIs or messaging queues for transporting data to different internal systems or microservices.